Pathology Image Analysis Laboratory

Representation learning

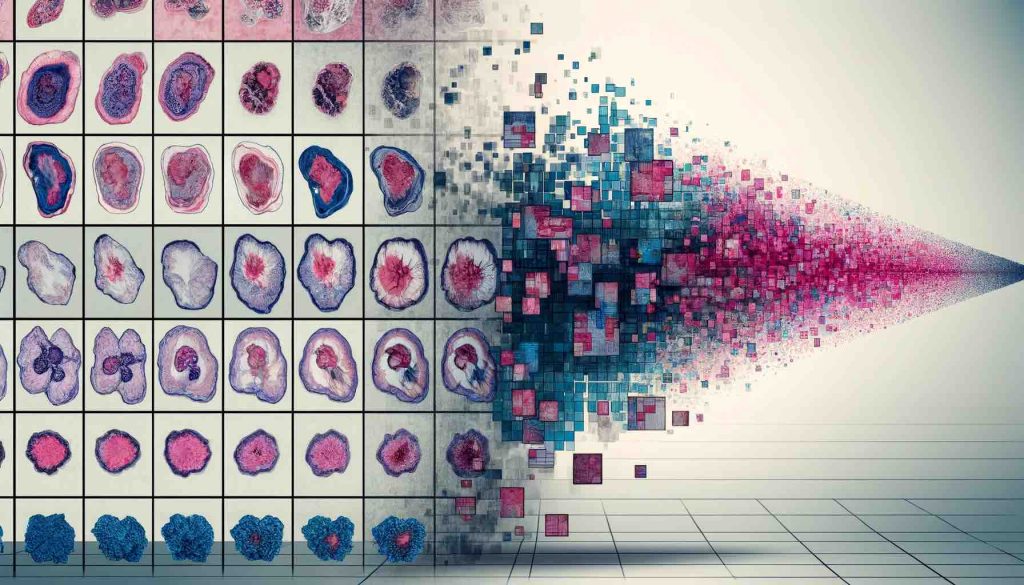

Computational pathology fundamentally hinges on our ability to learn and distill deep representations from histopathology images. Crafting universal, domain- and task-agnostic representations is pivotal to addressing challenges such as data scarcity, intra- and inter-observer variability, and the need for labor-intensive annotations. Our research harnesses self-supervised learning (SSL) to train large-scale foundation models that produce histology representations. Using state-of-the-art models, we build vision encoders for histology regions of interest, which we then extend to gigapixel whole-slide images for slide representation learning. To further enrich our models, we develop multimodal pretraining strategies that integrate expression data and textual information, building a multidimensional understanding of pathology data.
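
As an illustration of the kind of objective SSL can rely on, here is a minimal plain-Python sketch of an InfoNCE contrastive loss between two augmented views of the same batch of tissue tiles. It assumes the embeddings come from some tile encoder (not shown); the function names and the temperature of 0.1 are hypothetical, illustrative choices. It is not necessarily the objective used in our foundation models, and many histology SSL methods use other objectives such as self-distillation.

```python
import math


def l2_normalize(v):
    # Scale a vector to unit length (zero vectors are left unchanged)
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def info_nce(z_a, z_b, temperature=0.1):
    """Mean InfoNCE loss; z_a[i] and z_b[i] embed two augmented views of tile i."""
    a = [l2_normalize(v) for v in z_a]
    b = [l2_normalize(v) for v in z_b]
    total = 0.0
    for i, ai in enumerate(a):
        # Cosine similarity of view A of tile i with view B of every tile in the batch
        logits = [sum(x * y for x, y in zip(ai, bj)) / temperature for bj in b]
        # Log-sum-exp with max subtraction for numerical stability
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        # The matching index i is the positive pair; all other tiles act as negatives
        total += log_denom - logits[i]
    return total / len(a)
```

The loss is small when each tile's two views are closer to each other than to any other tile in the batch, so larger batches supply more negatives; a production version would run on GPU in a deep learning framework.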

- Key Publications

Joint Vision-Language Learning

Natural language data contain rich information about cell and tissue morphology and their interpretation in the context of diagnosis, prognosis, and patient management. When paired with the corresponding histology images, image captions can offer a strong supervisory signal that goes beyond the discrete class labels used in supervised learning or the semantics-preserving augmented views used in self-supervised contrastive learning. Our research leverages vision-only, text-only, and paired vision-language data for both unimodal and multimodal representation learning, and develops multimodal models specialized for visual language understanding in pathology.
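
One common way to turn paired images and captions into a training signal is a CLIP-style symmetric contrastive loss, sketched below in plain Python. This is an illustrative assumption, not a description of our models: the image and text embeddings are assumed to come from separate encoders (not shown), and the helper names and the temperature of 0.07 are hypothetical.

```python
import math


def _normalize(v):
    # Scale a vector to unit length (zero vectors are left unchanged)
    n = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / n for x in v]


def _cross_entropy(logits, target):
    # Numerically stable -log softmax(logits)[target]
    m = max(logits)
    return m + math.log(sum(math.exp(l - m) for l in logits)) - logits[target]


def clip_loss(img_emb, txt_emb, temperature=0.07):
    """Symmetric contrastive loss over a batch of paired (image, caption) embeddings."""
    imgs = [_normalize(v) for v in img_emb]
    txts = [_normalize(v) for v in txt_emb]
    n = len(imgs)
    # sim[i][j]: scaled cosine similarity between image i and caption j
    sim = [
        [sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    # Image -> text: each image should pick out its own caption (rows of sim)
    i2t = sum(_cross_entropy(sim[i], i) for i in range(n)) / n
    # Text -> image: each caption should pick out its own image (columns of sim)
    t2i = sum(_cross_entropy([sim[i][j] for i in range(n)], j) for j in range(n)) / n
    return (i2t + t2i) / 2
```

Averaging both directions means the caption supervises the image encoder and vice versa, which is what lets free-text captions act as supervision richer than a single class label.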

- Key Publications

3D pathology

Although human tissues are inherently three-dimensional (3D), the prevailing diagnostic methodology relies on the analysis of thin, two-dimensional (2D) tissue sections placed on glass slides. This 2D sampling captures only a fraction of the morphological complexity present in the full 3D tissue. Our research aims to close this gap by developing a 3D computational framework built on state-of-the-art 3D deep learning, with the primary goal of achieving better diagnostic and prognostic performance than current clinical practice. We accomplish this by explicitly encoding 3D morphology and aggregating heterogeneous morphology across the entire tissue volume.
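
A first step in processing a whole tissue volume is typically to split it into 3D sub-volumes that an encoder can handle. The sketch below only computes the (z, y, x) origins of such sub-volumes; the function name, patch size, and stride are hypothetical, and border regions smaller than a full patch are simply dropped as a simplifying assumption.

```python
from itertools import product


def tile_volume(shape, patch, stride=None):
    """Return (z, y, x) origins of 3D sub-volumes covering a volume of the given shape."""
    # Non-overlapping tiles by default; a smaller stride gives overlapping tiles
    stride = stride or patch
    depth, height, width = shape
    pd, ph, pw = patch
    sd, sh, sw = stride
    zs = range(0, depth - pd + 1, sd)
    ys = range(0, height - ph + 1, sh)
    xs = range(0, width - pw + 1, sw)
    # Partial sub-volumes at the borders are dropped in this sketch
    return list(product(zs, ys, xs))
```

Each sub-volume would then be embedded by a 3D encoder, and the resulting set of embeddings aggregated into one volume-level prediction, for example with the attention pooling used in weakly supervised learning.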

- Key Publications

- Song, Andrew H., et al. “Weakly Supervised AI for Efficient Analysis of 3D Pathology Samples.” arXiv (2023).

Multimodal data fusion

While whole-slide images (WSIs) already provide detailed descriptions of patient status through rich morphological cues, it has become clear that cancer progression and response to therapeutic regimens are governed by a multitude of factors, which warrants incorporating additional modalities for improved outcome prediction. Omics data, such as gene expression and mutations, are a natural choice: they provide comprehensive molecular details of the tissue, which may or may not be reflected in morphology, and can be obtained within the routine clinical workflow. Leveraging the latest developments in multimodal fusion strategies, we develop frameworks that exploit the complementary information in histology and genomics for better patient prognosis.
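
To make "fusion" concrete, the sketch below fuses a histology embedding and a genomic embedding with a Kronecker (outer) product, in the spirit of the fusion used in Pathomic Fusion (listed below). It is deliberately simplified and hypothetical: there are no learned projections or gating attention, and the function name is illustrative. Appending a constant 1 to each vector keeps the unimodal features in the fused output alongside every pairwise cross-modal interaction term.

```python
def kronecker_fusion(h_path, h_omic):
    """Fuse histology and genomic feature vectors via a flattened outer product.

    Output length is (len(h_path) + 1) * (len(h_omic) + 1).
    """
    # The appended 1s preserve each modality's own features in the product
    a = list(h_path) + [1.0]
    b = list(h_omic) + [1.0]
    # Entry (i, j) of the outer product, flattened row by row, is a[i] * b[j]
    return [x * y for x in a for y in b]
```

For a 2-dimensional histology vector and a 3-dimensional genomic vector this yields 12 features: 6 cross-modal products, the 2 histology and 3 genomic features themselves, and a constant. The fused vector would then feed a downstream survival or prognosis head.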

- Key Publications

- Chen, Richard J., et al. “Pan-cancer integrative histology-genomic analysis via multimodal deep learning.” Cancer Cell (2022): 865-878.

- Chen, Richard J., et al. “Multimodal co-attention transformer for survival prediction in gigapixel whole slide images.” Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 2021.

- Chen, Richard J., et al. “Pathomic fusion: an integrated framework for fusing histopathology and genomic features for cancer diagnosis and prognosis.” IEEE Transactions on Medical Imaging (2020): 757-770.

Weakly Supervised Learning and Applications

Advances in scanning systems, imaging technologies, and storage devices are generating an ever-increasing volume of whole-slide images (WSIs) in clinical facilities, which can be analyzed computationally using artificial intelligence (AI) and deep learning. The digitization and automation of clinical pathology, also referred to as computational pathology (CPath), can give patients and clinicians more objective diagnoses and prognoses, enable the discovery of novel biomarkers, and help predict response to therapy. Our research uses the latest developments in multiple instance learning (MIL), which learns from slide-level labels without region-level annotations, to achieve superior performance on various diagnostic tasks without being subject to inter-observer variability.
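
The core of many MIL models for WSIs is a pooling step that turns a bag of patch embeddings into one slide embedding. The plain-Python sketch below implements basic attention-based MIL pooling (Ilse et al., 2018); the parameters `V` and `w` would normally be learned and are given by hand here, and gated attention and other refinements used in practice are omitted.

```python
import math


def attention_mil_pool(instances, V, w):
    """Attention-based MIL pooling over a bag of patch embeddings.

    instances: K patch embeddings of dimension d
    V: hidden x d projection matrix (list of rows); w: attention vector of length hidden
    Returns (slide_embedding, attention_weights).
    """
    scores = []
    for h in instances:
        # Attention score: w^T tanh(V h)
        hidden = [math.tanh(sum(v * x for v, x in zip(row, h))) for row in V]
        scores.append(sum(wi * zi for wi, zi in zip(w, hidden)))
    # Softmax over the patches in the bag (max subtraction for stability)
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    attn = [e / total for e in exps]
    # Slide embedding is the attention-weighted sum of patch embeddings
    dim = len(instances[0])
    slide = [sum(a * h[j] for a, h in zip(attn, instances)) for j in range(dim)]
    return slide, attn
```

Only the slide embedding is supervised by the slide-level label; the attention weights come for free and can be mapped back onto the slide as a heatmap of the patches that drove the prediction.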

- Key Publications

- Lipkova, Jana, et al. “Deep learning-enabled assessment of cardiac allograft rejection from endomyocardial biopsies.” Nature Medicine (2022): 575-582.

- Lu, Ming Y., et al. “AI-based pathology predicts origins for cancers of unknown primary.” Nature 7861 (2021): 106-110.

- Lu, Ming Y., et al. “Data-efficient and weakly supervised computational pathology on whole-slide images.” Nature Biomedical Engineering (2021): 555-570.

Multiplex Imaging

Multiplex imaging technologies provide a unique capability to delve into the intricate biological interactions unfolding within tissues, surpassing the limitations of conventional tissue microscopy (histology). In this context, our research is centered on the development of innovative machine learning-based computational methods designed to tackle various challenges, including cell phenotyping and unraveling the complexities of the tumor microenvironment, leveraging the rich information contained within multiplex images.
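
For context, the simplest form of cell phenotyping from multiplex images is rule-based gating on per-cell marker intensities, the kind of hand-set baseline that learning-based phenotyping methods aim to improve on. The sketch below is hypothetical throughout: the marker panel (PanCK, CD3, CD8), the thresholds, and the phenotype labels are illustrative assumptions, and per-cell intensities are assumed to come from an upstream cell segmentation step.

```python
def phenotype_cells(cells, thresholds):
    """Assign a coarse phenotype to each cell from its per-marker mean intensities.

    cells: list of dicts mapping marker name -> intensity (missing markers count as 0)
    thresholds: dict mapping marker name -> positivity cutoff (hypothetical values)
    """
    def positive(cell, marker):
        return cell.get(marker, 0.0) >= thresholds[marker]

    labels = []
    for cell in cells:
        # Rules are checked in priority order; the first match wins
        if positive(cell, "PanCK"):
            labels.append("tumor/epithelial")
        elif positive(cell, "CD3") and positive(cell, "CD8"):
            labels.append("CD8+ T cell")
        elif positive(cell, "CD3"):
            labels.append("other T cell")
        else:
            labels.append("unassigned")
    return labels
```

Hand-set cutoffs like these are sensitive to staining and acquisition variability across slides, which is one motivation for learning phenotypes directly from the multiplex signal.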